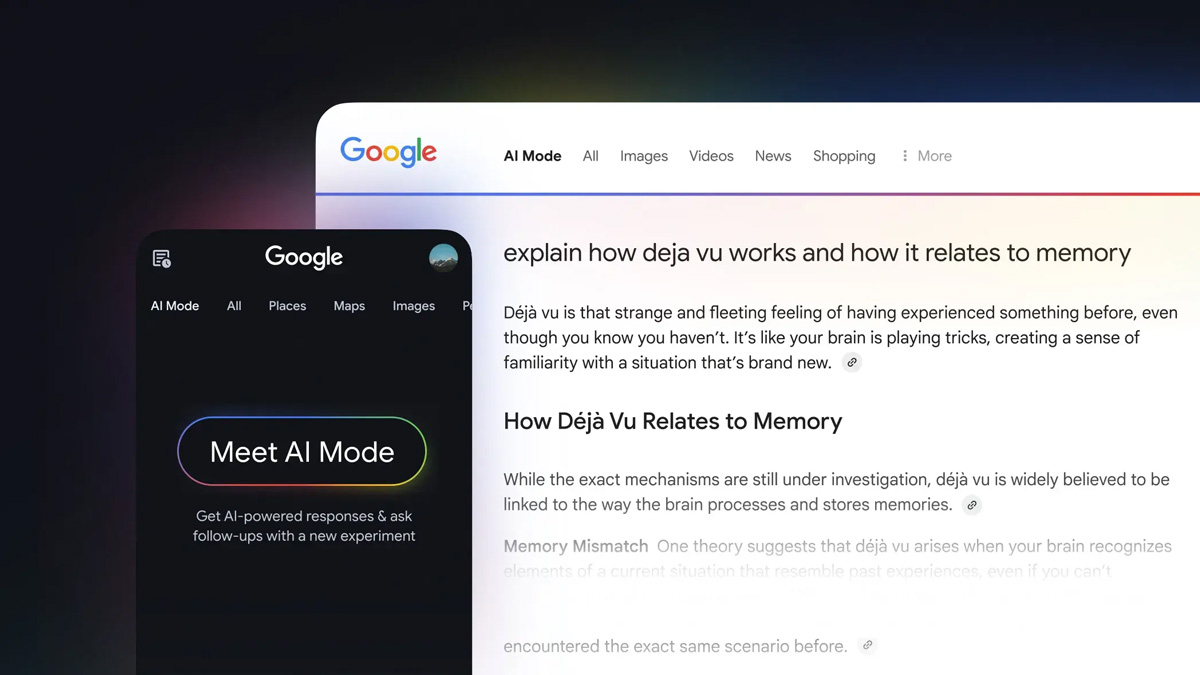

Google is expanding access to its AI Mode feature on Search and introducing more sophisticated AI capabilities into the platform.

Google says the changes are designed to make AI-generated search results more accurate and effective, and to improve the user experience.

The updates to AI Mode, the experimental AI-backed Google Search feature that was unveiled in early March and is designed for nuanced or multi-step queries, were announced today at Google I/O, the company’s tentpole developer conference, at its headquarters in Silicon Valley.

AI Mode will expand to all U.S. users for the first time. Both AI Mode and AI Overviews, the automated summaries that appear atop some Google search results, will now be powered by Gemini 2.5, Google’s suite of advanced reasoning models. AI Overviews will also expand to new markets, Google said, becoming available in more than 200 countries and 40 languages.

The platform will also get sharper and more user-friendly thanks to new capabilities to be folded in over the coming months, said Demis Hassabis, the CEO and cofounder of Google DeepMind, onstage at I/O today.

For one, AI Mode will soon have a capability called ‘Deep Search,’ akin to ChatGPT’s ‘Deep Research’ mode. Rather than simply searching an index, Deep Search scans multiple sites simultaneously and conducts automated follow-up searches in response to its findings, ultimately producing a thorough, heavily cited report in mere minutes.

“It will do maybe hundreds of queries and searches for you and browse the web to understand all the facets of your question,” Robby Stein, vp of product at Google Search, told ADWEEK. “Each section has links to dive in and learn more, and it brings all that knowledge and understanding into a long format. So you can be an expert in anything that you’re looking up or wanting to research.”

Google is also adding ‘live’ capabilities to AI Mode, enabling users to hold a real-time, two-way conversation with the system about their real-world environment, even using their device’s camera to show the platform what they’re seeing. In short, it’s like having a live phone call or video conference with the AI-powered search platform.

AI gets more agentic

Agentic capabilities will be integrated into Google’s AI Mode, too. With these offerings, users can assign tasks that the platform completes on its own by navigating the web and acting autonomously. For example, users can have it book haircuts, make restaurant reservations, and more.

Further, AI Mode will soon be able to produce data visualizations. The system can analyze users’ data sets and turn the resulting insights into easy-to-digest graphics tailored to specific queries, beginning with finance and sports.

“AI systems that were born out of text and chat are becoming more visual and expressive,” Stein said.

Finally, as part of its efforts to expand its commerce business, Google is also introducing shopping capabilities in AI Mode. Using data from Google’s Shopping Graph, which includes more than 50 billion product listings globally, the shopping experience will surface products that fit users’ specific queries.

“For example, [maybe] I have a light gray couch and I’m looking for a rug to brighten the room,” explained Lilian Rincon, vp of product at Google Shopping, in a demo with ADWEEK. After inputting this kind of query, a user can expect to see a “mosaic of dynamic, browsable, and personalized products,” she said. Plus, users can add parameters to further refine the results that are initially surfaced.

The announcements are part of Google’s larger AI investment strategy—at its Q1 earnings call in late April, Alphabet Chief Financial Officer Anat Ashkenazi reaffirmed the company’s plan to shell out around $75 billion this year on the technology.

Alphabet posted revenue of $90.23 billion for the quarter, outpacing analysts’ projections.